This page describes the current technical stack I use to produce the blog. I intend to keep it up to date as I make technology changes, as a sort of freeform changelog.

Web

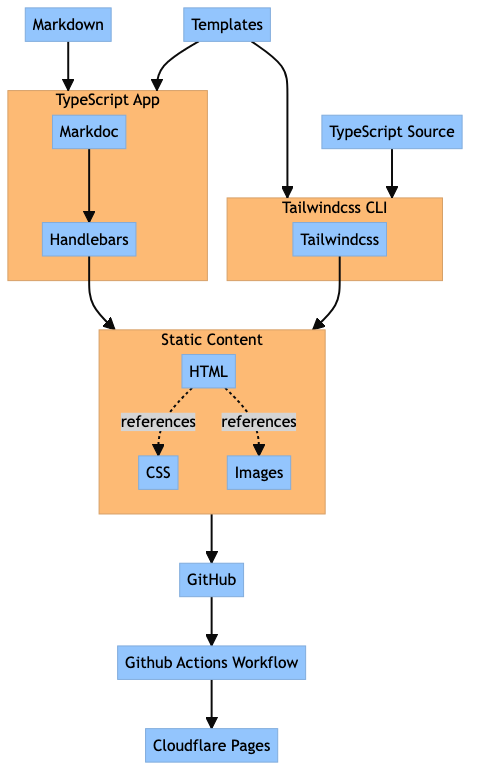

This blog is statically generated HTML, CSS, and images, served via Cloudflare Pages. The pages use a minimal amount of JavaScript to provide some non-critical interactivity, so if you have JavaScript disabled, the content and style should not be impacted.

I author the content for the blog posts and pages in Markdown and use Markdoc to render that content into HTML. This HTML is then inserted into a handful of Handlebars templates to produce the complete HTML for each page.

The HTML is styled with Tailwind CSS, configured with the very important Typography plugin. Tailwind CSS works really well with both Markdoc and Handlebars, so I can focus on producing the appropriate HTML with the desired styling encoded in the class attribute of each HTML element. The following is the only CSS source I have under source control:

```css
@tailwind base;
@tailwind components;
@tailwind utilities;
```

Source code blocks, like the above, are styled at build time using PrismJS.

This whole process produces a single directory that can then be given to any of the platforms that support static sites. I went with Cloudflare Pages as it offers:

- free unlimited bandwidth

- free custom domain TLS

- GitHub integration with private repos

- Setting HTTP header fields for specific URLs

- free, privacy-preserving analytics via Cloudflare Web Analytics

Human-friendly URLs

The publishing process derives the URL for each page from some metadata contained in the Markdown files. These URLs do not end with a .html extension. For example, the URL for this page is https://www.mcnulty.blog/pages/stack.
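
As a rough illustration of that derivation, the sketch below maps a page's metadata to an extensionless URL and output file. The `PageMeta` shape, its `kind` and `slug` fields, and the `dist` output directory are assumptions made for the example, not the actual metadata format used by this blog.

```typescript
// Hypothetical front-matter shape; the real metadata fields are not shown on this page.
interface PageMeta {
  kind: "pages" | "posts";
  slug: string;
}

interface OutputLocation {
  url: string; // public URL path, with no .html extension
  filePath: string; // file written inside the output directory
}

function locate(meta: PageMeta, outDir: string = "dist"): OutputLocation {
  // Normalise the slug so it is safe to use as a URL path segment.
  const slug = meta.slug
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
  const url = `/${meta.kind}/${slug}`;
  // The file has the same extensionless name as the URL path.
  return { url, filePath: `${outDir}${url}` };
}

console.log(locate({ kind: "pages", slug: "stack" }));
```

Because the file name carries no extension, the host cannot infer a content type from it, which is what the next paragraph deals with.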

Cloudflare Pages determines the Content-Type of each HTTP response from the file extension. To serve these extensionless pages with the correct HTML Content-Type, I unconditionally set the Content-Type for the directories that contain the HTML pages via the custom headers feature.
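
A minimal sketch of how such a rule set could be generated at build time, assuming the custom headers are supplied through a Cloudflare Pages `_headers` file in the output directory. The `buildHeadersFile` helper and the `posts` directory name are hypothetical; only `pages` appears in the URL above.

```typescript
// Build the text of a `_headers` file that forces an HTML Content-Type
// for every path under the given top-level directories.
function buildHeadersFile(directories: string[]): string {
  return directories
    .map((dir) => `/${dir}/*\n  Content-Type: text/html; charset=utf-8\n`)
    .join("\n");
}

// "pages" comes from this site's URLs; "posts" is an assumed second directory.
console.log(buildHeadersFile(["pages", "posts"]));
```

Each rule is a URL pattern on its own line followed by indented header lines, so one rule per directory covers every extensionless page beneath it.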

Diagram Rendering

I use either mermaid or PlantUML to create the diagrams in the posts. The source for the diagrams is written inline with the Markdown content, using VS Code's live preview support to get quick feedback. Both packages have pros and cons, so I pick one based on the requirements of each diagram.

Mermaid

I manually render the mermaid diagrams as images using mermaid-cli and commit those images along with the Markdown content.

This approach allows me to render mermaid diagrams once, instead of rendering them with JavaScript in each visitor's browser at page load or every time I generate the blog content. As the blog grows, I don't keep paying the cost of rendering diagrams that have not changed. The trade-off is that the image under source control may become stale relative to the diagram source in the Markdown content if I forget to regenerate it.
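
One possible way to catch that staleness, sketched here and not part of the current build, is to record a fingerprint of the diagram source when the image is rendered and compare it later. The sketch assumes diagrams live in fenced blocks tagged `mermaid`; the function names and the choice of a 32-bit FNV-1a hash are illustrative.

```typescript
// Three backticks, written with escapes so this sketch can sit inside a fenced block.
const FENCE = "\u0060\u0060\u0060";

// Collect the body of every fenced block tagged `mermaid` in a Markdown document.
function extractMermaidBlocks(markdown: string): string[] {
  const pattern = new RegExp(
    "^" + FENCE + "mermaid[^\\n]*\\n([\\s\\S]*?)^" + FENCE + "[ \\t]*$",
    "gm",
  );
  const blocks: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(markdown)) !== null) {
    blocks.push(match[1]);
  }
  return blocks;
}

// 32-bit FNV-1a over UTF-16 code units, as 8 hex digits.
// Non-cryptographic; only meant for change detection.
function fingerprint(source: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < source.length; i++) {
    hash ^= source.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

// An image is stale when the fingerprint recorded at render time
// no longer matches the diagram source in the Markdown.
function isStale(diagramSource: string, recordedFingerprint: string): boolean {
  return fingerprint(diagramSource) !== recordedFingerprint;
}
```

The recorded fingerprint could live in the image file name or in a small manifest committed next to the images; a check in CI would then flag diagrams that need to be regenerated.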

PlantUML

I use a locally-hosted PlantUML server to render my diagrams. My editor is also set up to use this server for rendering live previews. Like the mermaid diagrams, the rendered images are committed to source control alongside the Markdown content.

Automatic Publishing with GitHub Actions

The source for the blog is hosted in a private GitHub repository. The TypeScript app and tailwindcss CLI are invoked by a GitHub Actions workflow for each commit to produce a single directory with HTML, CSS, a small amount of JS, and images.

If a commit is tagged with a tag name starting with v, this directory is then committed to a dedicated deploy branch that shares no history with the main branch. Cloudflare Pages is configured to only deploy from the deploy branch.

The workflow step is something like the following:

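```yaml
- name: Add artifacts to deploy branch
  # Only publish for tags whose name starts with "v".
  if: ${{ startsWith(github.ref, 'refs/tags/v') }}
  run: |
    # Set the fresh build aside before switching to the deploy branch.
    mv dist next-dist
    ls -l
    git config --global user.name '${{ github.actor }}'
    git config --global user.email '${{ github.actor }}@users.noreply.github.com'
    # Assumption: the token expression is not legible in the original listing;
    # the built-in GITHUB_TOKEN secret is shown here as a stand-in.
    git remote set-url origin https://x-access-token:${{ secrets.GITHUB_TOKEN }}@github.com/$GITHUB_REPOSITORY
    git fetch origin
    git checkout -b deploy refs/remotes/origin/deploy
    ls -R dist next-dist || true
    # Replace the previously published output with the new build.
    rm -rf dist
    mv next-dist dist
    git add dist
    git commit -a -m 'deploy: ${{ github.sha }}'
    git push origin deploy
```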

I've used this pattern on a handful of static sites, and it's pretty slick. The deploy branch provides a nice sequence of diffs for the published changes while allowing me to fumble around on the main branch as I get the content in the correct state for publishing. It also allows me to use a self-hosted runner to avoid exceeding the free limits of either GitHub Actions or the static site provider's less featureful CI/CD offering. This pattern does require some initial setup to create the deploy branch as an orphan branch.

There is an email version of this blog that I send out via Buttondown. The content for the emails is generated from the same Markdown content as the web version, using a different TypeScript app that:

- Uses Markdoc to parse the Markdown

- Transforms the resulting Markdoc AST to remove any Markdoc-specific syntax and extensions

- Formats the Markdoc AST back into Markdown

- Outputs the post as a single Markdown file
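
To give a feel for the end result of those steps, here is a deliberately simplified, dependency-free sketch. It is not the AST-based approach described above: it swaps in a regular expression over the raw text to drop Markdoc `{% ... %}` tags and annotations while keeping the content between them. The `stripMarkdocTags` name is hypothetical, and a text-level pass like this would also strip tag-like text inside code samples, which is one reason to work on the AST instead.

```typescript
// Remove Markdoc tags and annotations, keeping the content they wrap.
function stripMarkdocTags(source: string): string {
  const withoutTags = source
    // Drop every {% ... %} span, plus any spaces or tabs just before it.
    .replace(/[ \t]*\{%[\s\S]*?%\}/g, "")
    // Collapse the blank lines left behind by removed block tags.
    .replace(/\n{3,}/g, "\n\n")
    .trim();
  return withoutTags + "\n";
}

const post = [
  "# Title {% #title %}",
  "",
  '{% callout type="note" %}',
  "Hello",
  "{% /callout %}",
  "",
  "Bye",
  "",
].join("\n");

console.log(stripMarkdocTags(post));
```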

Currently, I take this Markdown file, paste it into the Buttondown console, and press send. Buttondown has an API that I could integrate into the GitHub Actions workflow to automate this process, but that would require moving up to the Standard tier of Buttondown. If the readership of this blog ever necessitates upgrading my Buttondown account, I'll definitely look to automate this process. But that's a success problem I don't currently have 😉.